General

Anycast¶

Anycast is a form of network routing in which multiple servers share the same IP address, and messages to that address are routed to the server that is topologically closest. Traffic to the address is routed according to the routing tables configured in the network's routers. Administrators often configure their routers to point to the geographically nearest server, although the scheme is flexible enough to allow re-routing to a different physical location should the need arise.

flowchart TD

client

router

server

clientNY[client]

routerNY[router]

serverNY[server]

subgraph Chicago

client -->|service-anycast.landon.com| router --> server

end

subgraph New York

clientNY -->|service-anycast.landon.com| routerNY --> serverNY

end

Administrators can change the routing in Chicago to point to New York without needing any changes to the client.

flowchart TD

client

router

clientNY[client]

routerNY[router]

serverNY[server]

subgraph New York

clientNY -->|service-anycast.landon.com| routerNY --> serverNY

end

subgraph Chicago

client -->|service-anycast.landon.com| router --> serverNY

end

Anycast is a popular way to route to DNS servers, as it removes the need for clients to understand where they are geographically, or to maintain a list of appropriate DNS servers. That knowledge is instead maintained by the network administrators via the routing tables.
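The re-routing shown in the two diagrams can be modeled as a per-site lookup table. This is a minimal Python sketch, not real router configuration: the anycast address `192.0.2.53`, the server names, and the `routes` structure are all assumptions made for illustration.

```python
# Hypothetical anycast address (taken from the documentation range 192.0.2.0/24).
ANYCAST_IP = "192.0.2.53"

# Hypothetical per-site routing tables: destination address -> server to forward to.
routes = {
    "Chicago": {ANYCAST_IP: "server-chicago"},
    "New York": {ANYCAST_IP: "server-ny"},
}


def resolve(site, dest_ip):
    """Return the server that the router at `site` forwards `dest_ip` to."""
    return routes[site][dest_ip]


# Clients in both cities use the same address but reach different servers.
before = resolve("Chicago", ANYCAST_IP)

# The administrator re-points Chicago at New York; the client is unchanged.
routes["Chicago"][ANYCAST_IP] = "server-ny"
after = resolve("Chicago", ANYCAST_IP)
```

The client only ever knows `ANYCAST_IP`; the change happens entirely in the routing table.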

Multicast¶

Multicast is a routing mechanism that acts similarly to a pub-sub topic. The IPv4 multicast range is 224.0.0.0/4, which spans 224.0.0.0 to 239.255.255.255. Group membership on IPv4 is managed with the Internet Group Management Protocol (IGMP). A client sends an IGMP membership report (commonly called a join) to its nearest switch or router to indicate that it wants to be a member of a particular multicast group, identified by one of the aforementioned IP addresses. The router or switch maintains a list of subscribers for each group and forwards the group's traffic to each of them.
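From an application's point of view, joining a group is a socket option, and the operating system sends the IGMP message on its behalf. The sketch below uses Python's standard `socket` and `ipaddress` modules. The helper names (`is_multicast`, `build_mreq`, `join_group`) and the group address in the usage note are assumptions for illustration.

```python
import ipaddress
import socket


def is_multicast(addr: str) -> bool:
    """True if addr falls inside the multicast range (224.0.0.0/4 for IPv4)."""
    return ipaddress.ip_address(addr).is_multicast


def build_mreq(group: str, iface: str = "0.0.0.0") -> bytes:
    """Pack the ip_mreq structure: group address followed by local interface address."""
    return socket.inet_aton(group) + socket.inet_aton(iface)


def join_group(group: str, port: int) -> socket.socket:
    """Open a UDP socket and subscribe it to a multicast group.

    Setting IP_ADD_MEMBERSHIP is what causes the kernel to announce
    the membership to the local network.
    """
    if not is_multicast(group):
        raise ValueError(f"{group} is not a multicast address")
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP, build_mreq(group))
    return sock
```

A caller would then do something like `sock = join_group("239.1.2.3", 5000)` followed by `sock.recvfrom(65535)` to receive the group's traffic.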

Kernel Routing Tables¶

$ netstat -rn

Kernel IP routing table

Destination Gateway Genmask Flags MSS Window irtt Iface

0.0.0.0 7.151.164.131 0.0.0.0 UG 0 0 0 eno1

7.0.0.0 7.151.164.131 255.0.0.0 UG 0 0 0 eno1

7.151.164.128 0.0.0.0 255.255.255.128 U 0 0 0 eno1

$ ip r s

default via 7.151.164.131 dev eno1 proto static metric 100

7.0.0.0/8 via 7.151.164.131 dev eno1 proto static metric 65024000

7.151.164.128/25 dev eno1 proto kernel scope link src 7.151.164.166 metric 100

7.151.176.0/21 dev ib0 proto kernel scope link src 7.151.181.31 metric 150
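The listings above can be read as a longest-prefix match: of all routes whose prefix contains the destination, the most specific one wins. The following Python sketch replays the four routes from the `ip r s` output. It is a simplified model that ignores metrics, policy routing, and everything else the kernel considers; `lookup` is a hypothetical helper.

```python
import ipaddress

# (prefix, gateway, device) copied from the `ip r s` output above.
ROUTES = [
    ("0.0.0.0/0", "7.151.164.131", "eno1"),  # "default"
    ("7.0.0.0/8", "7.151.164.131", "eno1"),
    ("7.151.164.128/25", None, "eno1"),      # directly connected
    ("7.151.176.0/21", None, "ib0"),         # directly connected
]


def lookup(dest):
    """Return (prefix, gateway, device) of the most specific matching route."""
    addr = ipaddress.ip_address(dest)
    matches = []
    for prefix, gateway, dev in ROUTES:
        net = ipaddress.ip_network(prefix)
        if addr in net:
            matches.append((net.prefixlen, prefix, gateway, dev))
    # The default route always matches, so `matches` is never empty.
    _, prefix, gateway, dev = max(matches, key=lambda m: m[0])
    return prefix, gateway, dev
```

For example, `lookup("7.151.181.5")` selects the `7.151.176.0/21` route on `ib0`, while `lookup("8.8.8.8")` falls through to the default route.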

DHCP¶

DHCP, or Dynamic Host Configuration Protocol, is a protocol used to dynamically assign IP addresses to hosts within a network. DHCP can also hand clients the location of a boot server and boot file, which is how much netbooting is performed.

sequenceDiagram

Client->>DHCP Server: DISCOVER: Discover all DHCP servers on subnet

DHCP Server-->>Client: OFFER: Server receives ethernet broadcast and offers IP address

Client->>DHCP Server: REQUEST: Client sends REQUEST broadcast on subnet using offered IP.

DHCP Server-->>Client: ACK: Server responds with unicast and ACKs request.

DISCOVER¶

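Discover messages are broadcast on the local subnet. Because the client usually has no address configuration yet, it typically sends to the limited broadcast address 255.255.255.255; a client that already knows its subnet may instead use the subnet's directed broadcast address. For example, if the subnet is 192.168.0.0/16, the directed broadcast address is 192.168.255.255.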
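The broadcast address for the example subnet can be checked with Python's standard `ipaddress` module. This is just the arithmetic, not a DHCP client; the variable names are arbitrary.

```python
import ipaddress

subnet = ipaddress.ip_network("192.168.0.0/16")

# Highest address in the subnet: all host bits set to 1.
directed_broadcast = subnet.broadcast_address

# The all-ones address, usable before the client knows its subnet.
limited_broadcast = ipaddress.ip_address("255.255.255.255")

print(directed_broadcast)  # 192.168.255.255
```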

Clients can also request a specific IP address if desired. If none is requested, the DHCP server will offer an available address from its pool.

DECLINE¶

There can be situations in which two clients are erroneously allocated the same IP address. When a client receives an ACK from its DHCP server, indicating that the server granted the IP lease, the client should use ARP to discover whether any other computer on the subnet is already using the same address. If so, it should send a DECLINE broadcast to reject the lease.

RELEASE¶

When a client is done with an IP address, it can send a RELEASE message to relinquish the lease. Unlike DECLINE, RELEASE is sent as a unicast to the server that granted the lease.

Netbooting/PXE¶

DHCP servers can be configured to provide a next-server address, which is handed to the DHCP client along with its IP address. Netbooting is not strictly part of the DHCP spec, but is rather carried out as part of the PXE (Preboot Execution Environment) specification. A PXE boot uses the next-server address and filename parameters provided by DHCP, which identify a TFTP server and the loadable bootstrap program it serves. When a client receives these parameters, it initiates a download from the listed TFTP server and loads the bootstrap program. This interaction is usually done entirely in firmware on the NIC.

Arista MetaWatch¶

https://www.arista.com/en/products/7130-meta-watch

Arista is a company that provides various networking solutions. They have developed a switch application called MetaWatch that you can install on Arista 7130L Series devices. This application allows you to dynamically tap incoming ports in hardware instead of needing to use physical optical taps. The application can be configured to aggregate incoming ports into outgoing ports, which is a form of port multiplexing, AKA tap aggregation, AKA port aggregation.

The power of in-hardware tapping is that you can dynamically re-assign where incoming ports are sent to without needing any physical work. The downside is that because the port aggregation/tapping is done in physical computing hardware, you can get into buffer overflow issues and drop packets. In practice this is usually not an issue, but it is a consideration that must be made.

Ethernet¶

Frame Check Sequence (FCS)¶

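This is an error-detecting code appended to the end of an Ethernet frame to detect corruption in the frame. In Ethernet, the FCS is a 32-bit cyclic redundancy check (CRC-32) that the sender computes over the rest of the frame. A common way for the receiver to verify it is to run the same computation over the whole frame, including the trailing FCS; for an undamaged frame the result is a fixed, known value. Simpler codes, such as the running-sum checksums used by IP and ICMP, follow the same verify-by-recomputing principle.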
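The "fixed result" check can be demonstrated with Python's `zlib.crc32`, which implements the same CRC-32 polynomial. This is a sketch under assumptions: the payload is a placeholder rather than a real Ethernet frame, and `append_fcs` and `fcs_ok` are hypothetical helper names.

```python
import struct
import zlib

# Value zlib.crc32 yields when run over data followed by its own
# little-endian CRC-32; it is the same for every undamaged input.
CRC32_RESIDUE = 0x2144DF1C


def append_fcs(frame: bytes) -> bytes:
    """Sender side: compute CRC-32 over the frame and append it as 4 bytes."""
    return frame + struct.pack("<I", zlib.crc32(frame))


def fcs_ok(frame_with_fcs: bytes) -> bool:
    """Receiver side: CRC the whole frame, FCS included, and compare to the residue."""
    return zlib.crc32(frame_with_fcs) == CRC32_RESIDUE
```

Flipping any single bit in the output of `append_fcs` makes `fcs_ok` return `False`, which is the corruption case the FCS exists to catch.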

DNS¶

DNS record types

| Name | Description |
|---|---|
| A record | The most common. It maps a name to an IPv4 address. |
| AAAA record | Same as an A record, but points to an IPv6 address. |
| CNAME | Short for "canonical name," it creates an alias from one name to another. Remember, these point to other names, not to IP addresses. |
| NS | A nameserver record specifies the authoritative DNS servers for a particular domain. |
| MX record | A Mail Exchange record shows where email for a domain should be routed. |
| SOA | A Start of Authority record stores administrative information about a zone, including the administrator's email address. |
| TXT | A Text record stores arbitrary text data. |
| SRV | This record stores the host name and port of a particular service. |
| CERT | Stores public key certificates. |
| DHCID | Stores information related to DHCP. |
| DNAME | A Delegation Name record redirects an entire subtree of names to a new domain. For example, a DNAME at old.example.com that points to new.example.com causes host.old.example.com to resolve as host.new.example.com. |
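On the wire, each record type in the table is identified by a numeric code in the question section of a DNS query. The sketch below builds a raw query message with only the standard library. The helper names and the default query ID are assumptions for illustration, and nothing is sent over the network.

```python
import struct

# Numeric type codes assigned to the record types in the table above.
QTYPES = {
    "A": 1, "NS": 2, "CNAME": 5, "SOA": 6, "MX": 15, "TXT": 16,
    "AAAA": 28, "SRV": 33, "CERT": 37, "DNAME": 39, "DHCID": 49,
}


def encode_name(name: str) -> bytes:
    """Encode a domain name as length-prefixed labels ending in a zero byte."""
    out = b""
    for label in name.rstrip(".").split("."):
        raw = label.encode("ascii")
        out += bytes([len(raw)]) + raw
    return out + b"\x00"


def build_query(name: str, rtype: str, query_id: int = 0x1234) -> bytes:
    """Build a DNS query with one question and the recursion-desired flag set."""
    # Header: id, flags (0x0100 = recursion desired), 1 question, 0 other records.
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    # Question: encoded name, record type, class 1 (IN).
    question = encode_name(name) + struct.pack("!HH", QTYPES[rtype], 1)
    return header + question
```

Sending the result of `build_query("example.com", "AAAA")` to a resolver over UDP port 53 would ask for the name's IPv6 address.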

TCP¶

Keepalive¶

Keepalive is a TCP feature in which the OS periodically sends empty probe packets on an otherwise idle connection, both to confirm that the peer is still reachable and to prevent network components from expiring the connection. An application enables it per socket (SO_KEEPALIVE), and OS parameters set the default timing.

For example, in the /etc/sysctl.conf file, you can set these parameters:

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 9

net.ipv4.tcp_keepalive_intvl = 25

If you find your TCP connections are hanging or being closed unexpectedly, it's possible that idle connections are being dropped by a NAT device or firewall. Keepalive probes can prevent this.
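The same three timers can also be set per socket rather than system-wide. The sketch below uses the values from the sysctl example and assumes Linux, where the `TCP_KEEPIDLE`, `TCP_KEEPINTVL`, and `TCP_KEEPCNT` options are available; the function names are hypothetical.

```python
import socket


def enable_keepalive(sock, idle=600, interval=25, probes=9):
    """Turn on keepalive for one socket and override the kernel defaults."""
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_KEEPALIVE, 1)
    # The per-socket timer options are platform-specific, so guard them.
    if hasattr(socket, "TCP_KEEPIDLE"):
        sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_KEEPIDLE, idle)
        sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_KEEPINTVL, interval)
        sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_KEEPCNT, probes)


def seconds_until_declared_dead(idle=600, interval=25, probes=9):
    """Rough time before an unresponsive peer is given up on.

    The connection sits idle for `idle` seconds, then `probes` probes
    are sent `interval` seconds apart.
    """
    return idle + interval * probes
```

With the values above, a silent peer is detected after roughly `seconds_until_declared_dead()` = 825 seconds, so the idle time needs to be shorter than whatever timeout the NAT or firewall applies.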


SSH Optimizations¶

TCP has a common problem on high-latency or lossy network paths where it spends a lot of time establishing connections, acknowledging packets, and re-sending packets. rsync typically runs over SSH, which uses TCP to send data over the wire. OpenSSH does not provide any means of tuning TCP parameters, but there is an OpenSSH fork called HPN-SSH that provides options for you to tune things like:

- TCP receive buffer size

- TCP send buffer size

- TCP window size

These parameters can be increased to provide more tolerance to lossy or high-latency networks. In addition, you might also want to select different SSH ciphers to reduce load on the CPU. Here is a great blog on various benchmarks that were performed on commonly-available ciphers.

Red Hat has a blog on how to set buffer sizes.

# sysctl -w net.core.rmem_default=262144

# sysctl -w net.core.wmem_default=262144

# sysctl -w net.core.rmem_max=262144

# sysctl -w net.core.wmem_max=262144
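Whether a buffer of this size is large enough depends on the bandwidth-delay product (BDP) of the path: the amount of data that must be in flight to keep the link full. The sketch below does that arithmetic under simplifying assumptions: the buffer size is treated as the effective TCP window, and loss is ignored. The function names and the example link figures are hypothetical.

```python
def bdp_bytes(bandwidth_bits_per_s: int, rtt_ms: int) -> int:
    """Bytes that must be in flight to fill a link of the given speed and RTT."""
    return bandwidth_bits_per_s * rtt_ms // 8000


def max_throughput_bits_per_s(window_bytes: int, rtt_ms: int) -> int:
    """Upper bound on throughput when at most one window is sent per round trip."""
    return window_bytes * 8 * 1000 // rtt_ms


# A 1 Gbit/s path with a 100 ms round trip needs about 12.5 MB in flight.
needed = bdp_bytes(1_000_000_000, 100)

# A 262144-byte window on the same path caps out near 21 Mbit/s.
ceiling = max_throughput_bits_per_s(262144, 100)
```

In this model, a window smaller than the BDP leaves the link idle for part of every round trip, which is why larger buffers help on long, fast paths.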

rsync¶

Optimizations¶

rsync typically runs over SSH, so the SSH and TCP optimizations described in the SSH Optimizations section apply to it as well. This is where most of your performance improvements will come from.

The main method for improving aggregate rsync throughput is to spawn more processes so that more streams are being sent simultaneously. A single rsync process will eventually hit a maximum throughput, which is limited by factors such as round-trip latency and retransmits. Some optimizations you can do:

- Increase TCP window size (more data sent per round trip)

- Increase TCP send buffer size (kernel parameter)

- Increase TCP receive buffer size (kernel parameter)

OSI Layer 2 Protocols¶

Data Link Layer

LLDP¶

OSI Layer 3 Protocols¶

Network Layer

ICMP¶

Internet Control Message Protocol is used to report errors and diagnose issues in a network. The ping command sends ICMP echo requests, and traceroute relies on the ICMP Time Exceeded messages that routers send back.
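An ICMP echo request, the message ping sends, is small enough to build by hand. The sketch below packs one with the standard Internet checksum. The helper names and the default payload are assumptions, and actually sending the packet would need a raw socket and elevated privileges, which is left out.

```python
import struct


def internet_checksum(data: bytes) -> int:
    """16-bit one's-complement sum of the data, complemented."""
    if len(data) % 2:
        data += b"\x00"  # pad to a whole number of 16-bit words
    total = sum(struct.unpack("!%dH" % (len(data) // 2), data))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)  # fold carries back in
    return ~total & 0xFFFF


def build_echo_request(ident: int, seq: int, payload: bytes = b"ping") -> bytes:
    """Build an ICMP echo request (type 8, code 0) with a valid checksum."""
    # The checksum is computed with the checksum field set to zero.
    header = struct.pack("!BBHHH", 8, 0, 0, ident, seq)
    csum = internet_checksum(header + payload)
    return struct.pack("!BBHHH", 8, 0, csum, ident, seq) + payload
```

A receiver validates the packet by running `internet_checksum` over the whole message, which returns 0 when nothing was corrupted.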

OSI Layer 4 Protocols¶

Transport Layer

QUIC¶

QUIC is a transport-layer protocol, built on top of UDP, that aims to be effectively equivalent to TCP but with much reduced latency. This is achieved primarily through an abbreviated handshake: QUIC combines the transport and TLS handshakes so that a new connection needs only 1 round trip before data can flow, whereas TCP followed by TLS needs 2 or more (TCP's three-way handshake alone costs a round trip). It can be thought of as a TCP-like protocol with the efficiencies of UDP.

Congestion control is typically handled in userspace rather than in the kernel (where TCP's lives), which is claimed to allow the algorithms to evolve and improve rapidly.

TCP¶

UDP¶

OSI Layer 7 Protocols¶

HTTP¶

Obviously this has to be mentioned. It's the most common protocol of them all!

Websocket¶

gRPC¶

Google Remote Procedure Call.

Video Streaming¶

There are various standardized protocols for video streaming.

MPEG-DASH¶

MPEG stands for "Moving Picture Experts Group." DASH stands for "Dynamic Adaptive Streaming over HTTP". This protocol is used by YouTube and Netflix.

Apple HLS¶

HLS stands for "HTTP Live Streaming".

Microsoft Smooth Streaming¶

Seems to be used exclusively by Microsoft's products.

Adobe HTTP Dynamic Streaming (HDS)¶

Mainly used for Flash.

RDMA¶

Remote Direct Memory Access is a method of direct memory access across the network that does not involve either server's operating system.

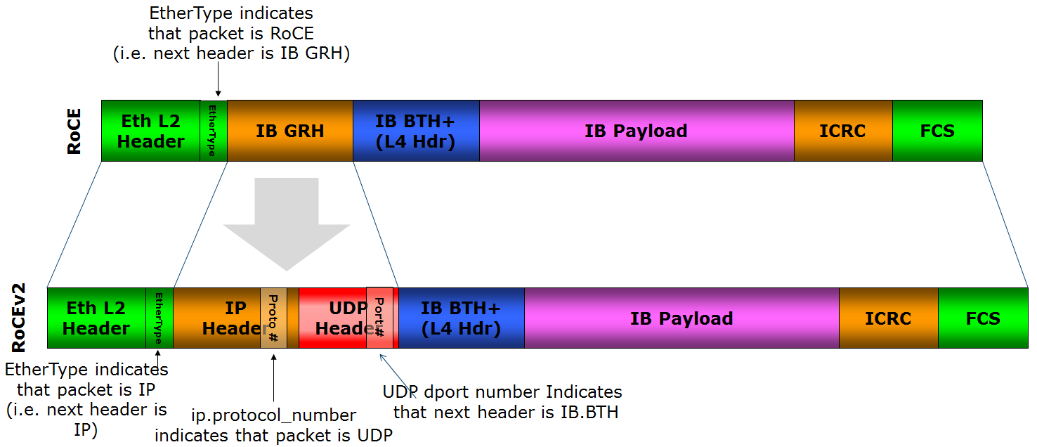

RoCE¶

RDMA over Converged Ethernet is an RDMA implementation over Ethernet. It carries an InfiniBand payload encapsulated in Ethernet frames. RoCE's goal is to bring InfiniBand's specification for RDMA to Ethernet networks, which is why the InfiniBand payload is encapsulated: that protocol is still used on the host side to perform the RDMA access.

ARP¶

Address Resolution Protocol is used to resolve an IP address to the MAC address that currently holds it. Hosts and routers on a local network use it to figure out which host (or MAC) has been assigned a specific IP.
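An ARP request is a fixed 28-byte message for IPv4 over Ethernet, asking "who has this IP?". The sketch below packs one with `struct`. The function name and the addresses in the usage note are assumptions, and the Ethernet header that would carry the message on the wire is omitted.

```python
import socket
import struct


def build_arp_request(sender_mac: bytes, sender_ip: str, target_ip: str) -> bytes:
    """Pack an ARP request for IPv4 over Ethernet (without the Ethernet header)."""
    return struct.pack(
        "!HHBBH6s4s6s4s",
        1,                            # hardware type: Ethernet
        0x0800,                       # protocol type: IPv4
        6,                            # hardware address length
        4,                            # protocol address length
        1,                            # operation: 1 = request
        sender_mac,
        socket.inet_aton(sender_ip),
        b"\x00" * 6,                  # target MAC is unknown, so left as zeros
        socket.inet_aton(target_ip),
    )
```

For example, `build_arp_request(bytes.fromhex("aabbccddeeff"), "192.168.0.10", "192.168.0.1")` asks which MAC owns 192.168.0.1; the owner answers with a reply (operation 2) that fills in its MAC.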

MIME Types¶

Media types (formerly known as MIME types) are names given to particular message formats. Historically they have been used in HTTP to define what kind of data is in the message body, but they are more broadly applicable to any kind of messaging protocol. A media type is roughly analogous to a file extension.
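The file-extension analogy is visible in Python's standard `mimetypes` module, which maps one to the other. The wrapper name `media_type_for` is hypothetical, and the exact mapping for less common extensions can vary between systems.

```python
import mimetypes


def media_type_for(filename: str):
    """Guess the media type from a file name's extension (None if unknown)."""
    media_type, _encoding = mimetypes.guess_type(filename)
    return media_type


html_type = media_type_for("index.html")
png_type = media_type_for("photo.png")
```

An HTTP server would put a value like `html_type` in the `Content-Type` header of its response.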

Network Namespace¶

Linux allows you to create a "network namespace" that acts kind of like a chroot but for network interfaces. It allows you to isolate a process from the host's network cards and create your own virtualized network topology.

https://medium.com/@tech_18484/how-to-create-network-namespace-in-linux-host-83ad56c4f46f

NCCL¶

NCCL stands for NVIDIA Collective Communications Library. It is a library used in applications that need to do collective, cross-GPU operations. It is topology-aware and provides an abstracted interface to the set of GPUs being used across a cluster, so that applications don't need to understand where a particular GPU resides.

Load Balancing¶

Layer 2 Direct Server Return (DSR)¶

DSR is a method of load balancing whereby the server sitting behind the load balancer will reply directly to the originating client instead of through the LB. The general flow is as follows:

- Client packets arrive in the load balancer.

- The load balancer makes a decision on which backend to forward to. It will modify the destination MAC address in the ethernet frame to the chosen backend and retransmit the packet to the MAC of its chosen backend (this is critical to make it appear to the layer 2 network that the packet did not originate from the LB).

- The chosen backend receives the packet. Because the layer 3 IP packet was untouched, it appears in all respects as if it came from the originating client. Thus, the backend will respond directly to the client IP through the default IP gateway.

Note that the backends and the load balancer all need to be configured with the same virtual IP (VIP). When the LB forwards the packet to the backend, only the destination MAC changes; the destination IP stays the same. This means that the LB and the backend services need to be on the same layer 2 network. Because all the backends are configured with the VIP, they accept the LB-forwarded packet and respond to it.
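The forwarding step can be modeled in a few lines. This is a toy simulation rather than a packet-processing implementation: the `Frame` fields, the class name, the addresses, and the round-robin backend selection are all assumptions made for illustration.

```python
import itertools
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Frame:
    """A simplified Ethernet frame carrying an IP packet."""
    src_mac: str
    dst_mac: str
    src_ip: str   # client IP, never touched by the load balancer
    dst_ip: str   # the VIP, never touched by the load balancer


class L2DsrBalancer:
    def __init__(self, backend_macs):
        # Round-robin is an assumed policy; any selection scheme would do.
        self._backends = itertools.cycle(backend_macs)

    def forward(self, frame: Frame) -> Frame:
        """Rewrite only the destination MAC; the IP fields pass through unchanged."""
        return replace(frame, dst_mac=next(self._backends))


lb = L2DsrBalancer(["02:00:00:00:00:01", "02:00:00:00:00:02"])
incoming = Frame("02:00:00:00:00:aa", "02:00:00:00:00:ff", "203.0.113.7", "198.51.100.10")
first = lb.forward(incoming)
second = lb.forward(incoming)
```

Because `first.src_ip` is still the client's address and `first.dst_ip` is still the VIP, the backend can reply straight to the client without the response passing back through the LB.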